The previous blog post about the FlexiGroBots Platform mentioned the “Common application services” as one of its five main components. In this entry, we describe in more detail the nine common modules that are currently being implemented.

As mentioned in the previous entry, these common applications are software services designed for perception, decision and action tasks in agricultural robotics solutions, built on state-of-the-art Machine Learning algorithms. They are being developed as assets that can be reused in a wide range of agricultural robotic solutions and that can be trained, stored, and deployed through MLOps pipelines (Kubeflow).

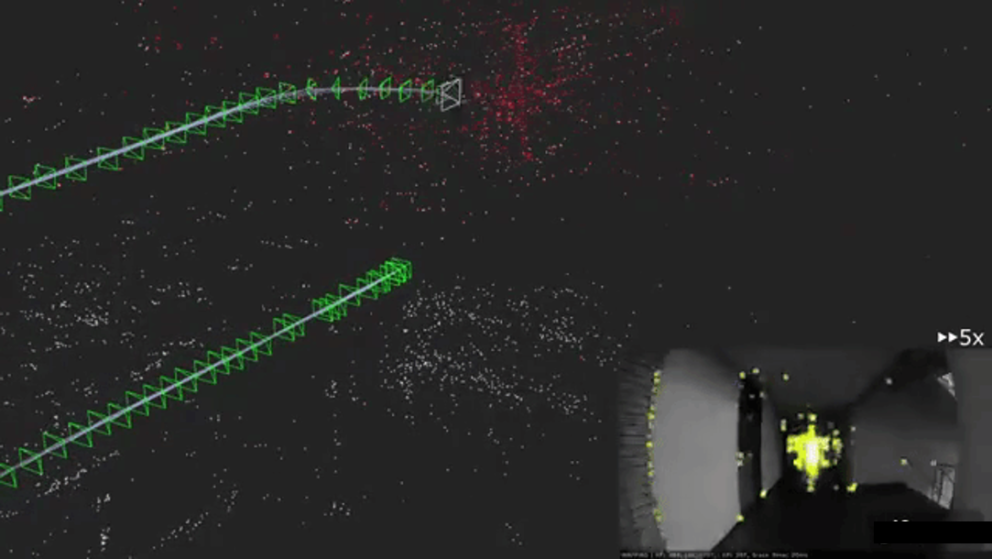

SLAM

The visual SLAM (Simultaneous Localization and Mapping) module will implement computer vision algorithms that identify visual landmarks and use them to locate a camera in real space. It will run on cameras mounted on UGVs (Unmanned Ground Vehicles) to complement GPS measurements and thus achieve more precise localization in the field. The module builds a map from the visual references and locates the camera within that map using monocular RGB sensors.

People Detection, Location and Tracking

This module aims to increase the UGVs’ situational awareness through computer vision algorithms. The component will be able to detect people in images, track them through successive video frames and estimate their distance from the camera centre. It works best at short range (under 6 meters) and is designed to be applied to the image feed from UGV cameras.
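The post does not say how the distance to a person is estimated. One common single-camera approach is the pinhole model, where range follows from the apparent height of a detected person. The sketch below illustrates that idea only: the function name, the 1.7 m average person height and the focal length value are assumptions for illustration, not details of the FlexiGroBots module.

```python
def estimate_distance_m(bbox_height_px: float,
                        focal_length_px: float,
                        person_height_m: float = 1.7) -> float:
    """Pinhole-camera range estimate: Z = f * H / h.

    f: focal length in pixels, H: assumed real height of the person,
    h: height of the detected bounding box in pixels.
    """
    if bbox_height_px <= 0:
        raise ValueError("bounding-box height must be positive")
    return focal_length_px * person_height_m / bbox_height_px


# A person whose box is 340 px tall, seen by a camera with an
# (assumed) 800 px focal length, is roughly 4 m away.
distance = estimate_distance_m(bbox_height_px=340, focal_length_px=800)
```

An estimate of this kind degrades when the person is partly occluded or not standing upright, which is one plausible reason such modules are specified for short range.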

People Action Recognition

Also aimed at increasing the UGVs’ situational awareness, this module uses Deep Learning models to detect people, estimate their pose and classify the actions they are carrying out. The current instance recognizes the following 9 actions: stand, walk, run, jump, sit, squat, kick, punch and wave. It works best at relatively close range (under 10 meters) and is designed to be used with cameras mounted on UGVs.

Moving Objects Detection, Location and Tracking

This module, unlike the previous ones, is designed to be used from UAVs (Unmanned Aerial Vehicles). It aims to increase the situational awareness of UGVs using aerial cameras that watch over the crop field from an overhead (zenithal) view. It will be capable of detecting tractors, cars, people and miscellaneous farming equipment from a bird’s-eye view. In addition, the component will be able to track individual tractors across frames and estimate the distance from other moving objects to them.
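To make the idea of tracking objects across frames concrete, here is a minimal sketch of one simple technique: greedy association of bounding boxes by intersection over union (IoU). The post does not state which tracker the module uses, so the function names, the box format and the 0.3 threshold are assumptions for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def update_tracks(tracks: Dict[int, Box], detections: List[Box],
                  next_id: int, min_iou: float = 0.3) -> Tuple[Dict[int, Box], int]:
    """Greedily match each detection to the unclaimed track it overlaps most."""
    updated: Dict[int, Box] = {}
    free = dict(tracks)  # tracks not yet claimed in this frame
    for det in detections:
        best_id = max(free, key=lambda tid: iou(free[tid], det), default=None)
        if best_id is not None and iou(free[best_id], det) >= min_iou:
            updated[best_id] = det  # same object, keep its ID
            del free[best_id]
        else:
            updated[next_id] = det  # unseen object: start a new track
            next_id += 1
    return updated, next_id


# Frame 1: one tractor. Frame 2: the tractor has moved slightly and a car appears.
tracks, next_id = update_tracks({}, [(100, 100, 200, 180)], next_id=0)
tracks, next_id = update_tracks(
    tracks, [(110, 104, 210, 184), (400, 50, 460, 90)], next_id
)
```

After the second frame the tractor keeps ID 0 and the car receives ID 1. In this sketch a track with no matching detection is simply dropped; a production tracker would typically keep it alive for a few frames and use a motion model.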

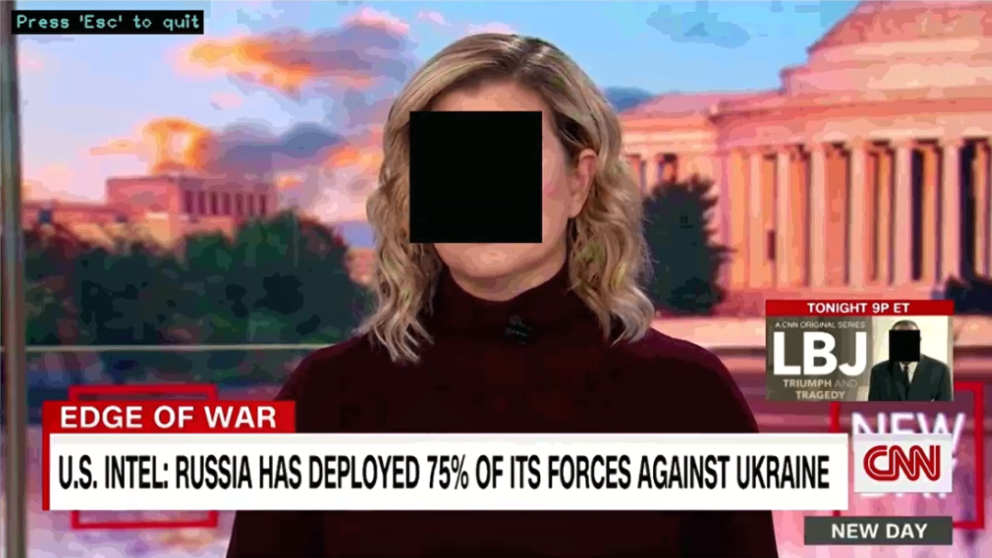

Anonymization Tool

The anonymization component can blur or completely erase the pixel information around people’s faces, which prevents anyone or anything from effectively identifying individuals in images. This utility module aims to improve personal data protection, making datasets compliant with GDPR. It can be applied to any image or video.
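The two options described above, blurring and erasing, can be sketched on a tiny grayscale image. This is an illustration under stated assumptions: the face box is taken as given (in practice it would come from a face detector, not shown), the "blur" is a crude region average rather than a Gaussian filter, and all names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale image as rows of 0-255 values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive


def anonymize_region(image: Image, box: Box, mode: str = "blur") -> Image:
    """Return a copy of `image` with the boxed region blurred or erased.

    The box is assumed to lie inside the image.
    """
    x0, y0, x1, y1 = box
    out = [row[:] for row in image]  # leave the input untouched
    region = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    if not region:
        return out
    if mode == "erase":
        fill = 0  # discard the pixel information entirely
    elif mode == "blur":
        fill = sum(region) // len(region)  # crude blur: region mean
    else:
        raise ValueError(f"unknown mode: {mode}")
    for y in range(y0, y1):
        for x in range(x0, x1):
            out[y][x] = fill
    return out


frame = [
    [10, 10, 10, 10],
    [10, 50, 90, 10],
    [10, 70, 30, 10],
    [10, 10, 10, 10],
]
face_box = (1, 1, 3, 3)  # hypothetical output of a face detector
blurred = anonymize_region(frame, face_box, mode="blur")
erased = anonymize_region(frame, face_box, mode="erase")
```

Erasing is irreversible by construction, while a weak blur can sometimes be partially undone, so the choice between the two modes is a trade-off between privacy and how much of the image remains usable.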

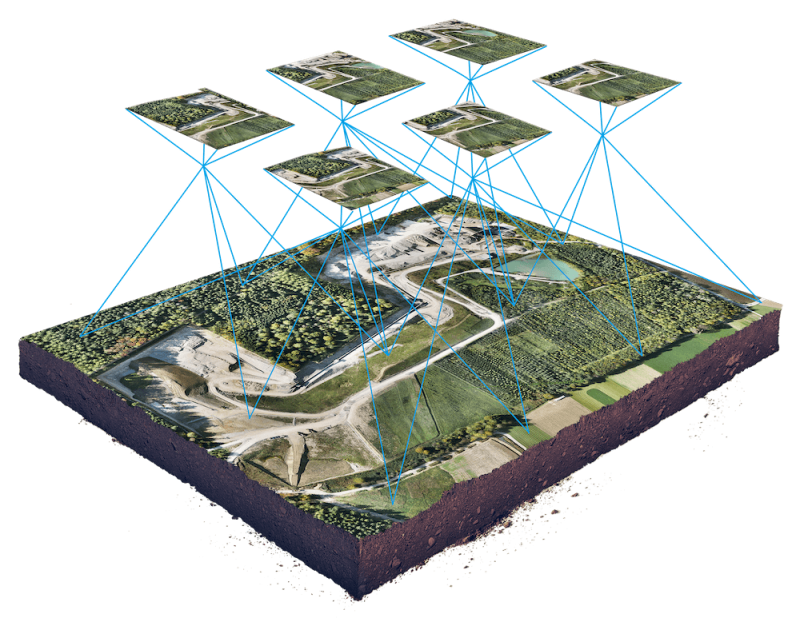

Orthomosaic Assessment Tool

This component is actually composed of two different modules. The first one is a software application that automatically generates orthomosaics from a sequence of images. These image tiles, or puzzle pieces, need to be taken from a drone with a given angle, flight height and metadata for the module to work. The second part of the component is the orthomosaic assessment proper. It is automated software that processes the image to extract insightful information and generate an assessment report. Some examples of processed information would be heatmaps that high

Generalization Components

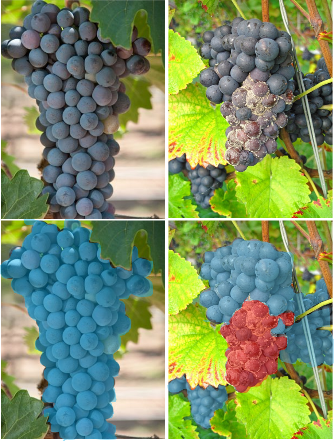

The last three components follow a common design, development, and deployment paradigm, so it makes sense to explain them together. In each of the project Pilots, the partners are developing specific computer vision algorithms that fit their use-cases. As part of the common application services, however, the consortium plans to generalize these specific components to make them available to a wider range of applications. This will be done by applying transfer learning and domain adaptation techniques to the Deep Convolutional Neural Networks (DCNNs) that are specifically trained to solve the Pilots’ use-cases. In the case of the FlexiGroBots Platform, we aim to generalize three components:

Disease Detection in Fruit

Insect Infestation Detection and Counting

Generalized from the Meligethes aeneus detection and counting in rapeseed (Pilot 2).

Weed Detection in row-based plots

Generalized from the weed detection in blueberry farms (Pilot 3).