We are pleased to introduce the second version of the FlexiGroBots platform. This platform, already featured in this blog post, aims to facilitate the use of adaptable and diverse multi-robot systems for the precise automation of agricultural operations. The second version of the platform prototype meets several specific objectives. Work has continued on configuring and adapting the state-of-the-art technologies used to build the platform and its subsystems. In addition, the vision set out in D2.3 Requirements and platform architecture specifications has been integrated, so that the resulting solution brings together the platform's subsystems and the requirements from the Pilots. Document D3.2 FlexiGroBots Platform v2 provides a comprehensive description of this second version.

Components of the platform

Over the past few months, considerable attention has been paid to integrating the platform components and addressing the Pilots' requirements, which were among the aspects most frequently highlighted during the project review at M18. The multi-robot management system components have also been further developed and integrated:

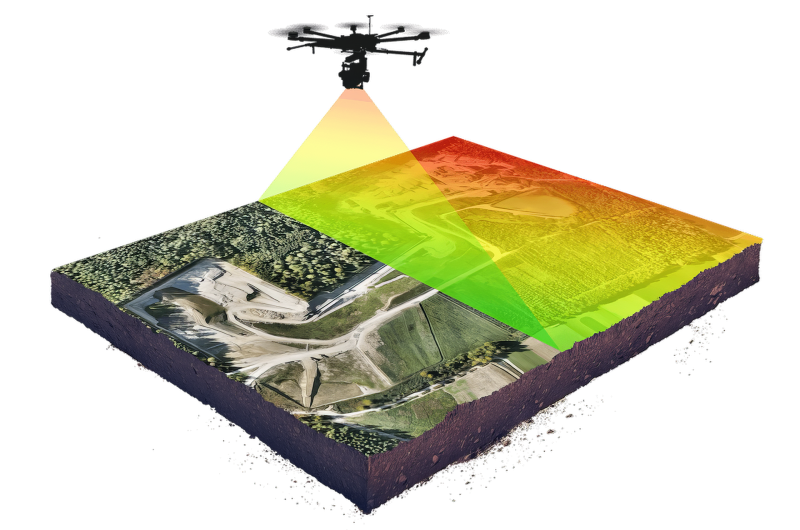

The Machine Learning Operations (MLOps) subsystem, which relies on Kubeflow, has made significant progress on pipelines that cover the complete lifecycle of ML models. These pipelines start at the MinIO object storage component, where an HTTP connector has been implemented for uploading the Pilots' datasets, such as UAV (Unmanned Aerial Vehicle) images. The data is then processed in Kubeflow. The AI toolbox of this subsystem includes a range of Deep Learning libraries, Machine Learning algorithms, and libraries for processing geospatial and other data. Notably, complete pipelines have been developed within Kubeflow for training and testing image processing algorithms, including hyperparameter optimization and model evaluation. Finally, the outputs of these pipelines, such as models and metrics, are stored in the MinIO object storage.
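The flow described above (read a dataset, search over hyperparameters, evaluate, store models and metrics) can be illustrated with a deliberately tiny sketch. It is not the project's Kubeflow pipeline: the threshold "model", the sample data, the storage keys, and the plain dictionary standing in for MinIO object storage are all assumptions made for illustration.

```python
def evaluate(threshold, samples):
    """Accuracy of a toy classifier that predicts True when score >= threshold."""
    correct = sum((score >= threshold) == label for score, label in samples)
    return correct / len(samples)


def run_pipeline(samples, thresholds, store):
    """Grid-search the threshold, then store the best model and all metrics.

    `store` is a plain dict used here as a stand-in for object storage.
    """
    # Hyperparameter optimization step: evaluate every candidate value.
    metrics = {threshold: evaluate(threshold, samples) for threshold in thresholds}
    # Model selection step: keep the candidate with the highest accuracy.
    best = max(metrics, key=metrics.get)
    # Storage step: hypothetical object keys for the model and the metrics.
    store["models/best.json"] = {"threshold": best}
    store["metrics/grid_search.json"] = metrics
    return best, metrics[best]


# Hypothetical validation data: (detector score, ground-truth label).
samples = [(0.1, False), (0.4, False), (0.6, True), (0.9, True)]
store = {}
best_threshold, best_accuracy = run_pipeline(samples, [0.2, 0.5, 0.8], store)
print(best_threshold, best_accuracy)
```

In an actual deployment each step would typically run as its own pipeline component, with the dictionary replaced by reads from and writes to the object storage.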

Efforts have also focused on deploying existing solutions and models as KServe inference services, taking advantage of the GPU (Graphics Processing Unit) of the Kubernetes cluster. As a result, the AI subsystem is ready not only for development but also for hosting Common application services that devices in the Pilots can use. The availability of the AI subsystem has in turn spurred the development of agriculture-focused applications. Priority has been given to specific applications such as pest, disease, and weed detection, with pipelines built for the segmentation and detection of these elements. In addition, zero-shot and open-vocabulary applications have been implemented to address a wide range of problems in agriculture, beyond fruits and crops alone. Several prototypes have been developed: some have reached their final state, others still require fine-tuning, and a few are being rethought because their results were inadequate. The finished applications include an anonymization tool, an automatic dataset generation tool, and people detection, localization, and tracking for UGVs (Unmanned Ground Vehicles). Applications that are almost finished include an orthomosaic assessment tool, people action recognition, and mobile object detection, localization, and tracking for UAVs. Applications under development include SLAM (Simultaneous Localization And Mapping) and virtualization, weed detection, pest detection, and disease detection in fruits.
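As a rough illustration of how a device might call such an inference service, the sketch below builds a request for KServe's V1 inference protocol, which exposes a `/v1/models/<name>:predict` endpoint that accepts a JSON body with an `instances` list. The host name, the model name `weed-detector`, and the input values are hypothetical; the project's actual services and payloads are not described in this post.

```python
import json
import urllib.request


def build_predict_request(base_url, model_name, instances):
    """Build (but do not send) a KServe V1 predict request."""
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint, model name, and input features.
request = build_predict_request(
    "http://kserve.example.org", "weed-detector", [[0.1, 0.2, 0.3]]
)
print(request.get_method(), request.full_url)

# Sending the request requires a reachable service, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     predictions = json.load(response)["predictions"]
```

Building the request separately from sending it keeps the example runnable without a cluster and makes the payload easy to inspect.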

Development of the Geospatial data management and processing services has focused on improving the indexing of UAV orthomosaics and related products collected for the Spanish pilot, using the functionality of the Open Data Cube. A Python tool has been developed to generate the required SpatioTemporal Asset Catalog (STAC) metadata JSON files for the different collections as new flight datasets become available in the system. A set of UAV product definitions has also been created as a YAML file describing each UAV output and derived product. These definitions were used to register the UAV products in the Open Data Cube and to link them with the STAC JSON files generated by the Python script. Lastly, new UAV flights and botrytis datasets were collected and used to feed the Open Data Cube and the MapServer service, which serves the botrytis vector-based information.
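To give an idea of what generating STAC metadata involves, here is a minimal sketch that builds a STAC Item (a GeoJSON Feature with a few required STAC fields) for one orthomosaic. It is not the project's tool: the function name, identifiers, coordinates, collection name, and file paths are all assumed for illustration.

```python
import json
from datetime import datetime, timezone


def build_stac_item(item_id, collection, bbox, acquired, asset_href):
    """Return a minimal STAC 1.0.0 Item as a dict.

    `bbox` is (west, south, east, north) in WGS84 degrees and `acquired`
    is a timezone-aware datetime.
    """
    west, south, east, north = bbox
    # Footprint polygon derived from the bounding box; the ring is closed
    # by repeating the first vertex.
    ring = [[west, south], [east, south], [east, north], [west, north], [west, south]]
    return {
        "type": "Feature",
        "stac_version": "1.0.0",
        "id": item_id,
        "collection": collection,
        "bbox": [west, south, east, north],
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": {
            "datetime": acquired.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
        },
        # An Item that names a collection also links to it; the href is hypothetical.
        "links": [{"rel": "collection", "href": "./collection.json"}],
        "assets": {
            "orthomosaic": {
                "href": asset_href,
                "type": "image/tiff; application=geotiff",
                "roles": ["data"],
            }
        },
    }


# Hypothetical flight over a vineyard plot.
item = build_stac_item(
    item_id="flight-2023-06-15",
    collection="uav-orthomosaics",
    bbox=(-8.80, 42.10, -8.79, 42.11),
    acquired=datetime(2023, 6, 15, 10, 30, tzinfo=timezone.utc),
    asset_href="./flight-2023-06-15/orthomosaic.tif",
)
print(json.dumps(item, indent=2))
```

One such JSON file per flight product is the kind of record a catalogue or an indexing tool can then consume.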

The architecture of the Mission Control Center (MCC) (see this post for more information about this topic) has been refined. The system currently comprises mission workflow management and fleet management components, which communicate through the FlexiGroBots Data Space and the MQTT broker, along with a robot task planner that plans the tasks of individual robots. These components are further divided into subcomponents: the mission workflow planner, mission workflow controller, mission reporter, mission repository, fleet supervisor, and fleet controller. The mission workflow description format, known as the Mission File, has been developed, and the message formats for MCC status and command messages have been defined. Initial prototypes of the mission workflow manager and fleet manager have been developed, and a MATLAB version of the robot task planner has been created.
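The post does not give the actual MCC message formats, so the following is only a sketch of how status and command messages could be modelled and serialized as JSON payloads for an MQTT broker. Every field name, state value, and topic pattern below is hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class StatusMessage:
    """Hypothetical status report sent by a robot to the fleet manager."""
    robot_id: str
    task_id: str
    state: str          # assumed values: "idle", "running", "done", "failed"
    battery_pct: float


@dataclass
class CommandMessage:
    """Hypothetical command sent by the fleet manager to a robot."""
    robot_id: str
    task_id: str
    command: str        # assumed values: "start", "pause", "abort"


def encode(message):
    """Serialize a message dataclass to a UTF-8 JSON payload."""
    return json.dumps(asdict(message)).encode("utf-8")


def decode(payload, message_type):
    """Rebuild a message dataclass from a UTF-8 JSON payload."""
    return message_type(**json.loads(payload.decode("utf-8")))


def status_topic(robot_id):
    """Hypothetical per-robot topic for status messages."""
    return f"flexigrobots/mcc/status/{robot_id}"


status = StatusMessage(robot_id="ugv-01", task_id="spray-row-7", state="running", battery_pct=82.5)
payload = encode(status)
print(status_topic(status.robot_id), payload.decode("utf-8"))

command = CommandMessage(robot_id="ugv-01", task_id="spray-row-7", command="pause")
```

The encoded payload is what an MQTT client would publish on the topic; keeping encoding separate from transport means the same messages could also travel through the Data Space.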

Progress on the Common components has concentrated on integration, with a particular focus on moving the Data Space to production. All systems designed by IDSA have been updated to their latest versions, and the new components have been refined to make them easier to deploy in Kubernetes. The system has also been tested with varying volumes of traffic through the connectors, to better understand how to bound the traffic volume and tune data transfer. Various connectors for the pilots have been integrated into the Data Space. Because the Data Space is intended to bind together the subsystems of the platform, a Python library that links the Data Space and the MinIO object storage has been developed. In addition, a REST API-based architecture has been outlined to integrate the AI subsystem with the Data Space, and the MCC has been integrated with the Data Space deployed by Atos.

Find FlexiGroBots prototypes and future releases in the GitHub repository of the project.